How it works

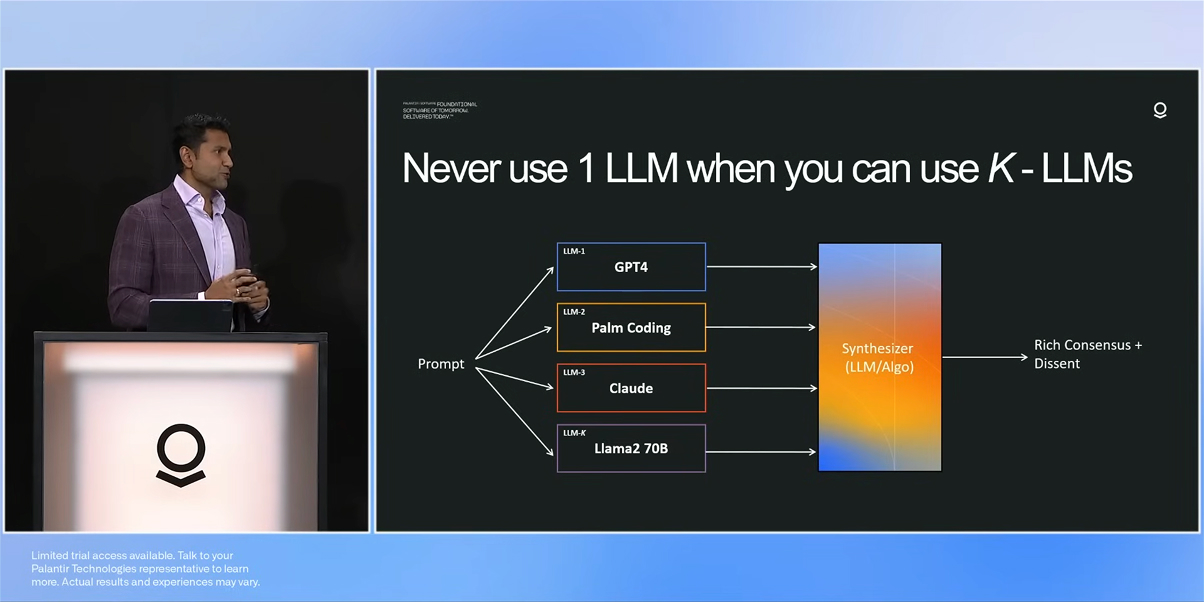

Parallel execution

Send the same prompt to multiple LLM instances simultaneously.

Parse responses

Parse each response into structured data using your schema.

Consensus merge

Merge responses using majority voting and confidence scoring.
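The three steps above can be sketched in plain Python. This is a minimal illustration, not the k-llms implementation: `fake_llm_call`, `consensus_merge`, and `run_consensus` are hypothetical names, the model call is a stub that returns canned JSON, and confidence is assumed to be the share of responses that agree with the winning value.

```python
import asyncio
import json
from collections import Counter


async def fake_llm_call(prompt: str, call_index: int) -> str:
    """Hypothetical stand-in for a model call; returns a JSON string."""
    await asyncio.sleep(0)  # yield control, as a real network call would
    # Canned answers: three calls agree on the date, one disagrees.
    date = "Saturday" if call_index == 3 else "Friday"
    return json.dumps({"name": "science fair", "date": date})


def consensus_merge(parsed: list[dict]) -> tuple[dict, dict]:
    """Majority-vote each field; confidence is the winner's share of votes."""
    merged, likelihoods = {}, {}
    for field in parsed[0]:
        # Serialize values so unhashable ones (lists, dicts) can be counted.
        votes = Counter(json.dumps(p.get(field), sort_keys=True) for p in parsed)
        winner, count = votes.most_common(1)[0]
        merged[field] = json.loads(winner)
        likelihoods[field] = count / len(parsed)
    return merged, likelihoods


async def run_consensus(prompt: str, n: int) -> tuple[dict, dict]:
    # Step 1: send the same prompt n times concurrently.
    raw = await asyncio.gather(*(fake_llm_call(prompt, i) for i in range(n)))
    # Step 2: parse each response into structured data.
    parsed = [json.loads(r) for r in raw]
    # Step 3: merge by per-field majority vote.
    return consensus_merge(parsed)


prompt = "Extract event info: Alice and Bob are going to a science fair on Friday."
merged, likelihoods = asyncio.run(run_consensus(prompt, n=4))
print(merged)
print(likelihoods)
```

In this toy run the dissenting "Saturday" answer is outvoted, and the lower score on `date` records that one of the four calls disagreed.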

Basic usage

k-llms implements the K-LLMs methodology where multiple models evaluate the same prompt. Results are automatically reconciled into a single output with confidence scoring.

Works with any OpenAI-compatible model and supports structured output validation with Pydantic models.

```python
from k_llms import KLLMs
from pydantic import BaseModel

client = KLLMs()


class Event(BaseModel):
    name: str
    date: str
    participants: list[str]


response = client.chat.completions.parse(
    model="gpt-4.1-nano",
    messages=[
        {
            "role": "user",
            "content": "Extract event info: Alice and Bob are going to a science fair on Friday.",
        }
    ],
    response_format=Event,
    n=4,  # Run 4 parallel calls
)

print("Content:", response.choices[0].message.content)  # Event dict as a JSON string
print("Parsed:", response.choices[0].message.parsed)  # Event object
print("Likelihoods:", response.likelihoods)  # Confidence scores

# The loop starts at 1, skipping choices[0], which is printed above.
for i in range(1, len(response.choices)):
    print(f"Choice {response.choices[i].index}: {response.choices[i].message.content}")
```
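One way to act on the confidence scores is to flag fields that fall below a threshold for manual review. The sketch below is an illustration under assumptions: it assumes `response.likelihoods` is a nested structure of dicts, lists, and floats that mirrors the schema, which the text above does not confirm. `low_confidence_fields` is a hypothetical helper, not part of k-llms, and the example scores are made up.

```python
def low_confidence_fields(likelihoods, threshold=0.75, prefix=""):
    """Return paths of entries whose score is below the threshold."""
    flagged = []
    if isinstance(likelihoods, dict):
        for key, value in likelihoods.items():
            path = f"{prefix}.{key}" if prefix else key
            flagged += low_confidence_fields(value, threshold, path)
    elif isinstance(likelihoods, list):
        for i, value in enumerate(likelihoods):
            flagged += low_confidence_fields(value, threshold, f"{prefix}[{i}]")
    elif isinstance(likelihoods, (int, float)) and likelihoods < threshold:
        flagged.append(prefix)
    return flagged


# Hypothetical scores shaped like the Event schema; not real library output.
example = {"name": 1.0, "date": 0.5, "participants": [1.0, 0.25]}
flagged = low_confidence_fields(example)
print(flagged)
```

The threshold is a design choice: a stricter value sends more fields to review, a looser one trusts the consensus more often.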

Why k-llms?

Enterprise-grade LLM consensus for everyone

k-llms gives you the state-of-the-art multi-model strategy popularized by Palantir's K-LLMs approach, all in a single API call.

By using consensus voting across multiple models, you get a consistent result with higher accuracy, fewer hallucinations, and field-level confidence scores that help quantify uncertainty.