TL;DR

Palantir’s introduction of its “k-LLMs” architecture for its Artificial Intelligence Platform (AIP) showed that the consensus pattern scales to Fortune 100 operations.

"You send the entirety of each of the responses to a synthesis stage to understand, rate, and compare the answers you've gotten."

(Shyam Sankar, CTO of Palantir)

Retab’s “k-LLMs” layer for document processing helps achieve up to 99% extraction precision on messy files (PDFs, Excel spreadsheets, emails, etc.).

Palantir’s Enterprise Validation

In 2024, Palantir’s AIP introduced an LLM Multiplexer that “builds consensus across multiple models”. This is the same pattern Retab has been using under the hood since 2023, which validates the path we chose to explore.

The feature lets operators blend GPT, Grok, Claude, and other models, then route the winning answer straight into live agents for logistics, insurance, and defense use-cases.

The takeaway: consensus isn’t just academic; it’s now a core primitive inside one of the most security-sensitive production stacks on the planet.

Why Consensus Matters in Document Processing

Large language models are non-deterministic—the same prompt can return different answers each time.

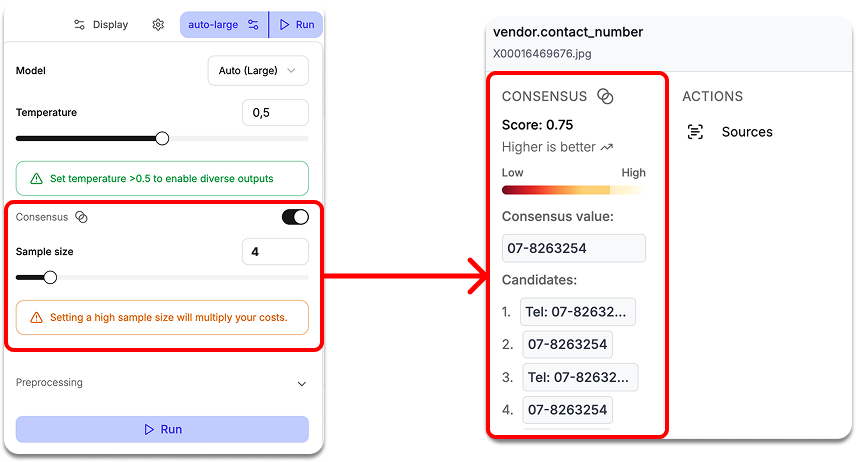

Consensus reduces this variability by sending the prompt through K independent model calls and merging their JSON outputs into one result.

Our algorithm then compares those outputs field-by-field and scores the uncertainty of every field by averaging the pair-wise distance between answers. The smaller the distance, the higher the likelihood.
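
As a rough illustration of the scoring idea above, the sketch below computes a per-field likelihood as one minus the mean pairwise distance between the K answers. The distance function (a normalized string dissimilarity from `difflib`) and the helper names `field_distance` and `field_likelihoods` are assumptions made for this example, not Retab's actual metric.

```python
from difflib import SequenceMatcher
from itertools import combinations


def field_distance(a, b) -> float:
    """Hypothetical distance in [0, 1]: 0 means identical answers."""
    if a == b:
        return 0.0
    return 1.0 - SequenceMatcher(None, str(a), str(b)).ratio()


def field_likelihoods(outputs: list[dict]) -> dict[str, float]:
    """Score each top-level field as 1 - mean pairwise distance across outputs."""
    scores = {}
    fields = set().union(*(o.keys() for o in outputs))
    for field in sorted(fields):
        values = [o.get(field) for o in outputs]
        pairs = list(combinations(values, 2))
        if not pairs:
            # A single output has nothing to disagree with.
            scores[field] = 1.0
            continue
        mean_distance = sum(field_distance(a, b) for a, b in pairs) / len(pairs)
        scores[field] = 1.0 - mean_distance
    return scores


# Three illustrative model outputs: they agree on the number, not on the total.
outputs = [
    {"invoice_number": "INV-001", "total": 120.0},
    {"invoice_number": "INV-001", "total": 120.0},
    {"invoice_number": "INV-001", "total": 210.0},
]
likelihoods = field_likelihoods(outputs)
print(likelihoods)
```

Fields on which every call agrees score exactly 1.0, while the disputed `total` field scores lower, which is the kind of signal that can flag a field for review.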

On Retab’s platform this signal exposes ambiguous user queries. When the calls disagree, it means the prompt can be interpreted in several ways, so we need to tighten the schema—clearer descriptions, less-ambiguous names, stricter types—before moving on.

These field-level likelihoods also lay the groundwork for an agent that can refine schemas autonomously, even without human supervision.

Anatomy of Retab’s k-LLM Layer

How Consensus Works

Under the hood Retab:

Fires n_consensus identical calls.

Parses each raw answer into a Pydantic model / JSON‑Schema object.

Runs a deterministic reconciliation strategy:

Exact match vote for scalar fields (strings, numbers, booleans)

Deep merge for arrays when all models agree on length and order

Recursive reconciliation for nested objects

Returns the reconciled object in response.output_parsed (Responses) or completion.choices[0].message.parsed (Completions).

If any response fails JSON validation, the call is retried at most once; after that, a ConsensusError is raised.
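
The reconciliation steps listed above can be sketched in a few lines of plain Python. This is a minimal illustration, not Retab's implementation: the function names `vote` and `reconcile` are hypothetical, the fallback of voting on whole arrays when their lengths differ is an assumption, and the retry and `ConsensusError` handling is left out.

```python
import json
from collections import Counter


def vote(values: list):
    """Exact-match vote: return the most frequent value."""
    # Serialize so that unhashable values (lists, dicts) can be counted too.
    keys = [json.dumps(v, sort_keys=True) for v in values]
    winner, _ = Counter(keys).most_common(1)[0]
    return json.loads(winner)


def reconcile(values: list):
    """Reconcile K parsed answers for the same field into one value."""
    if all(isinstance(v, dict) for v in values):
        # Nested objects: reconcile each key recursively.
        keys = sorted(set().union(*values))
        return {k: reconcile([v.get(k) for v in values]) for k in keys}
    same_length = len({len(v) for v in values if isinstance(v, list)}) == 1
    if all(isinstance(v, list) for v in values) and same_length:
        # Arrays of equal length: reconcile position by position.
        return [reconcile(list(items)) for items in zip(*values)]
    # Scalars (and, as an assumption, arrays of differing length): vote.
    return vote(values)


# Three illustrative parsed answers for the same document.
answers = [
    {"vendor": "Acme", "total": 120.0, "lines": [{"qty": 2}, {"qty": 1}]},
    {"vendor": "Acme", "total": 120.0, "lines": [{"qty": 2}, {"qty": 7}]},
    {"vendor": "ACME Corp", "total": 120.0, "lines": [{"qty": 2}, {"qty": 1}]},
]
consensus = reconcile(answers)
print(consensus)
```

Because every step is deterministic, the same set of K answers always reconciles to the same object, even though the answers themselves vary between runs.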

import json

from dotenv import load_dotenv
from retab import Retab

load_dotenv()  # Requires a .env file containing RETAB_API_KEY=sk_retab_***

client = Retab()

# Invoice is a Pydantic model describing the fields to extract (definition not shown here).
response = client.documents.extract(
    documents=["../assets/code/invoice.jpeg"],
    model="gpt-4o-mini",  # or any model your plan supports
    json_schema=Invoice.model_json_schema(),
    temperature=0.5,  # set a non-zero temperature so the calls can differ
    modality="text",
    n_consensus=5,  # 5 calls run in parallel
)

k-LLMs Open-source Library

We built k-llms, a quick, type‑safe wrapper around the OpenAI Chat Completions and Responses endpoints that adds automatic consensus reconciliation through the n parameter.

Basic usage:

from k_llms import KLLMs
from pydantic import BaseModel

client = KLLMs()


class CalendarEvent(BaseModel):
    name: str
    date: str
    participants: list[str]


completion = client.chat.completions.parse(
    model="gpt-4.1",
    messages=[
        {"role": "system", "content": "Extract the event information."},
        {"role": "user", "content": "Alice and Bob are going to a science fair on Friday."},
    ],
    response_format=CalendarEvent,
    n=4,
)

print("Consensus output:", completion.choices[0].message.parsed)
print("Likelihoods:", completion.likelihoods)

# Print every returned choice.
for i in range(len(completion.choices)):
    print(f"Choice {i}: {completion.choices[i].message.parsed}")

Performance Gains in the Wild

Using k-LLM consensus on your extractions drastically improves accuracy scores:

Invoices (3 pages): 92% → 99.1% accuracy score.

Hand-written claims: 78% → 94.7% accuracy score.

SEC 10-K tables: 85% → 96.3% accuracy score.

Note that because cost scales roughly linearly with k, methods such as shadow-voting (anchoring a single “premium” model that you trust for quality, such as o3, then filling the remaining k − 1 seats with cheaper open-weight models such as the latest gpt-oss-20B/120B) retain most of the lift at ~40% of the price.
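
To see where a figure like ~40% can come from, the back-of-the-envelope sketch below compares k premium calls against one premium call plus k - 1 cheaper ones. It assumes the percentage is measured against an all-premium ensemble, and the price ratio of 0.25 is a made-up illustrative value, not a quoted price. The function name `shadow_voting_cost_ratio` is hypothetical.

```python
def shadow_voting_cost_ratio(k: int, cheap_to_premium_price: float) -> float:
    """Cost of 1 premium + (k - 1) cheap calls, relative to k premium calls."""
    mixed = 1.0 + (k - 1) * cheap_to_premium_price
    all_premium = float(k)
    return mixed / all_premium


# Illustrative assumption: a cheap call costs a quarter of a premium call.
ratio = shadow_voting_cost_ratio(k=5, cheap_to_premium_price=0.25)
print(f"{ratio:.0%} of the all-premium cost")
```

The cheaper the open-weight seats are relative to the premium model, the closer the ratio gets to 1/k.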

FAQ

Is consensus still worth it with GPT-4o?

Yes. Ensembles add 4-6 percentage points of accuracy on semi-structured docs even with frontier models.

How is this different from RAG?

k-LLM is a generation-time ensemble; Retrieval-Augmented Generation enriches the prompt. They stack beautifully.

Will five calls explode my budget?

Use smaller models for four votes and let the premium model arbitrate ties.
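
One way to read this advice is sketched below: the cheaper models vote, and the premium answer is consulted only when they tie. The function name `vote_with_arbiter` and the exact tie-breaking rule are assumptions for illustration, not a description of how Retab arbitrates.

```python
from collections import Counter


def vote_with_arbiter(cheap_votes: list[str], premium_vote: str) -> str:
    """Majority vote over the cheap models; the premium answer breaks ties."""
    if not cheap_votes:
        return premium_vote
    counts = Counter(cheap_votes).most_common()
    top_count = counts[0][1]
    leaders = [value for value, count in counts if count == top_count]
    if len(leaders) == 1:
        # A clear winner among the cheap models needs no arbitration.
        return leaders[0]
    # Tie among the cheap models: defer to the premium model's answer.
    return premium_vote


clear = vote_with_arbiter(["2024-03-01"] * 3 + ["2024-03-10"], "2024-03-10")
tied = vote_with_arbiter(["2024-03-01"] * 2 + ["2024-03-10"] * 2, "2024-03-10")
print(clear, tied)
```

In this sketch the premium model's answer matters only for the tied field, so its cost could in principle be deferred until a tie actually occurs.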

Conclusion

Building consensus and trust takes time-consuming iteration.

Retab’s Evaluation mode lets teams measure that lift before they commit to it: upload a labeled corpus, toggle single-shot versus k-LLM ensembles, and watch precision-recall, latency, and cost curves populate in real time. Heat-maps highlight the fields with the widest model disagreement; one click drills down to the raw completions so you can debug or tighten the schema. When the metrics clear the thresholds you set, promotion to production is literally a switch—no code rewrite required.

Don't hesitate to reach out on X or Discord if you have any questions or feedback!